This article was published in Scientific American’s former blog network and reflects the views of the author, not necessarily those of Scientific American

Businesses are becoming dependent on artificial intelligence at a stunning pace. The number of enterprises using AI tripled in the last year alone, with 37 percent of organizations now using it, one survey found.

But the vast majority of the AI projects businesses undertake fail. One of the biggest reasons is, as VentureBeat reports, “unrealistic expectations.” Myths surround AI, and many people assume these technologies will do a better job than they ultimately do.

Sometimes, they can appear to be doing a fantastic job, when in fact they’re failing in profound ways. That’s what Facebook has been wrestling with. The social media platform’s technologies were manipulated to spread false news stories and influence the 2016 election. To combat this, the company recently announced the appointment of teams of humans to reclaim some of the work algorithms have been doing in selecting and recommending news stories to users.

How can any business know whether its technologies are getting better results than humans would? I recommend doing what we did: set up a competition.

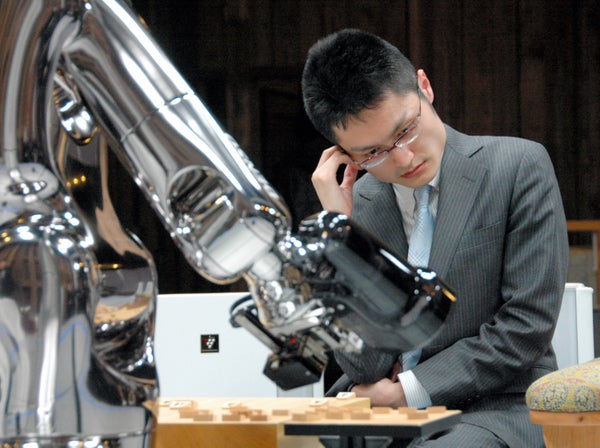

Just as IBM’s Deep Blue beat chess grandmaster Garry Kasparov, its Watson beat two Jeopardy champions, and an AI system built by DeepMind beat the world’s top Go player, I wondered: could our technology beat me, the founder and CEO?

magpie vs. me

I went up against magpie, the set of algorithms that my company, Filtered, uses to recommend learning content to people at businesses who are looking to pick up new skills. What Spotify does for music and YouTube does for video, magpie does for corporate learning.

The challenge involved 200 Harvard Business Review articles. One slice of the work our algorithms carry out is to classify articles according to their value in helping someone learn useful skills. One of the most important aspects of this is tagging—applying certain labels to each article so that it will appear for users in the right places, at the right times.

Who did a better job? The short answer is that it was a blowout victory for magpie, but the one way in which I did better was important and instructive. There is no sense of schadenfreude here; in fact, our goal is to make magpie better so that it will beat me on even this metric next time.

When it came to discovering the right tags to stamp on an article and recognizing the native tags that HBR itself applies, magpie won emphatically. Not only were its results better and more accurate than mine, but I took two hours to do what magpie achieved in five seconds!
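For readers who want to run a similar head-to-head, here is a minimal sketch of how one article's tags might be scored against a reference set such as a publisher's native tags. It is an illustration only, not the scoring we used: the function name `score_tags` and the sample tags are hypothetical, and precision, recall and F1 are simply standard measures for comparing label sets.

```python
def score_tags(predicted, reference):
    """Return (precision, recall, f1) for one article's tag set."""
    predicted, reference = set(predicted), set(reference)
    hits = len(predicted & reference)  # tags both sides agree on
    precision = hits / len(predicted) if predicted else 0.0
    recall = hits / len(reference) if reference else 0.0
    f1 = 2 * precision * recall / (precision + recall) if hits else 0.0
    return precision, recall, f1


# Made-up tags for a single article (assumption, not real data).
reference = {"leadership", "negotiation", "strategy"}
human = {"leadership", "strategy", "coding"}
algorithm = {"leadership", "negotiation", "strategy", "hiring"}

human_scores = score_tags(human, reference)
algorithm_scores = score_tags(algorithm, reference)
print("human:", human_scores)
print("algorithm:", algorithm_scores)
```

Averaging these scores over every article in the test set, and recording the time each contestant took, gives a simple scorecard for comparing a person with an algorithm.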

So where did I beat magpie? On “keytagging”—the term we use for determining the main thrust of each HBR article.

This is a crucial task. If one of our users is looking to learn about coding, and our engine provides the learner with an article that only mentions coding inconsequentially, the learner might not find value in the recommendation. In order to make a difference for the learner, our system needs to get the essence of the article right too; it needs to know that the article is really about coding.
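To make the distinction between a passing mention and a main theme concrete, here is a deliberately crude sketch. It is not how magpie works; the function names, the sample sentences and the 0.1 threshold are all assumptions chosen for illustration. It simply measures what share of an article's words belong to a topic and treats the topic as a keytag only when that share is high enough.

```python
import re


def mention_share(text, topic_terms):
    """Fraction of the article's words that are topic terms."""
    words = re.findall(r"[a-z']+", text.lower())
    if not words:
        return 0.0
    terms = {t.lower() for t in topic_terms}
    return sum(w in terms for w in words) / len(words)


def is_keytag(text, topic_terms, threshold=0.1):
    """Hypothetical rule: the topic is central if its share meets the threshold."""
    return mention_share(text, topic_terms) >= threshold


coding_terms = {"coding", "code"}  # assumed vocabulary for the topic
central = "Coding is a skill. Learn coding by writing code daily."
passing = ("Our offsite covered budgets, hiring and travel, "
           "and one speaker mentioned coding.")

print(is_keytag(central, coding_terms))
print(is_keytag(passing, coding_terms))
```

A word-count rule like this misses synonyms, context and emphasis, which is part of why a human reader can still come out ahead on this task.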

This test served as a powerful reminder of the enduring importance of humans in curating anything. If you put blind faith in AI, leave humans out of the process and don’t engage in human-run quality control, your project is likely to fail.

Building better algorithms

After seeing these results, we set about “teaching” magpie how to do a better job of keytagging—and how to get better in other ways as well, since there was still room for improvement even in the ways it beat me. Next time we run the test (at the end of this year), we expect magpie to outdo me in understanding the main thrust of an article and win all the way down the scorecard.

Will this relentless advance of technology make humans obsolete? No. Just as with keytagging, there will, for the foreseeable future, be activities that people are better at. Building algorithms to better handle certain tasks frees up people to focus on other important roles in the company.

For example, by handing off parts of our recommendation system to AI, we’ve been able to commit more employee time to engaging with customers and prospects about what we offer, and why it works. Computers can’t yet synthesize and articulate complex concepts. Nor can they build human relationships.

A big part of the future of business and society in general is about humans and AI working together. The benefits are clear, and can be seen in similar head-to-head matchups.

“Centaurs,” in which a human and a computer form a single team, have been shown to outplay pure AI at chess. As MIT’s Journal of Design and Science put it, “humans & AIs are strong on different dimensions.” Working together, we can break new ground.