This article was published in Scientific American’s former blog network and reflects the views of the author, not necessarily those of Scientific American

On the most recent episode of our podcast My Favorite Theorem, my cohost Kevin Knudson and I talked with Sophie Carr, who works as a consultant “finding patterns in numbers,” as she describes it. She is also the World’s Most Interesting Mathematician, according to the Big Internet Math-Off, a fun competition hosted by the Aperiodical this past summer. (In January, we had Nira Chamberlain, 2018’s World’s Most Interesting Mathematician, as a guest on the show.)

You can listen to the episode here or at kpknudson.com, where there is also a transcript.

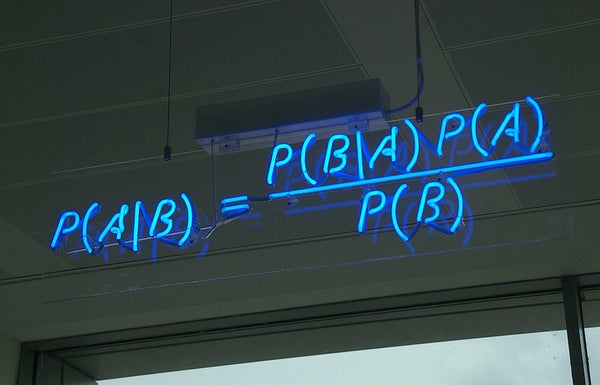

I was very glad to hear that Carr’s favorite theorem is Bayes’ theorem because I have long felt that I had insufficient appreciation for this theorem. Bayes’ theorem is a statement about conditional probability—the probability of an event given other known conditions. A classic example is the probability that you have a disease, given that you tested positive for it. The answer depends on the prevalence of the disease in the population and how accurate the test is—that is, how likely you are to test positive if you have it and how likely you are to test positive if you do not have it. If a disease is rare and the test misdiagnoses a large number of healthy people as having the disease, you may be unlikely to have the disease even if you test positive for it.
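To make the disease example concrete, here is a minimal Python sketch. The numbers are invented for illustration and do not describe any real disease or test: I assume 1 percent of people have the disease, the test catches 95 percent of true cases, and it wrongly flags 10 percent of healthy people. The function name `posterior_given_positive` is also just a label I made up. In words, Bayes’ theorem says the probability of having the disease given a positive test equals the probability of a positive test given the disease, times the prevalence, divided by the overall probability of testing positive.

```python
def posterior_given_positive(prevalence, sensitivity, false_positive_rate):
    """Return P(disease | positive test) using Bayes' theorem."""
    # Overall chance of a positive result: true positives plus false positives.
    p_positive = sensitivity * prevalence + false_positive_rate * (1 - prevalence)
    # Share of all positive results that come from people who have the disease.
    return sensitivity * prevalence / p_positive


# Hypothetical inputs, chosen only to illustrate the effect of a rare disease.
p = posterior_given_positive(
    prevalence=0.01,           # 1 in 100 people have the disease
    sensitivity=0.95,          # P(test positive | disease)
    false_positive_rate=0.10,  # P(test positive | no disease)
)
print(f"P(disease | positive) = {p:.3f}")
```

With these made-up inputs, the chance of having the disease after a positive test comes out a little under 9 percent, even though the test catches 95 percent of true cases. The healthy people who test positive simply outnumber the sick ones.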

Bayes’ theorem quantifies those probabilities. It also forms the foundation of Bayesian statistics, one of the two main branches of statistics. (The other is frequentist.) A full explanation of the intricacies and relative merits of Bayesian and frequentist statistics is more of a can of worms than I want to open here, but they come from two different philosophies about what probability and uncertainty mean, and statisticians can use both to develop techniques that help people make sense of the world. To Carr, Bayes’ theorem and Bayesian inference feel like natural extensions of the intuitive ways we humans learn by interacting with the world. In Bayesian inference, you are continually updating your beliefs based on your prior beliefs and new evidence, using tools like Bayes’ theorem.
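The idea of continually updating beliefs can be sketched in a few lines of Python. This is only a toy illustration under assumptions of my own: the starting belief of 1 percent, the 95 percent and 10 percent test rates, and the helper name `update` are all hypothetical, and I assume each repeated test result is independent of the others, which real tests often are not. Each pass through the loop takes the previous answer as the new prior.

```python
def update(prior, p_evidence_if_true, p_evidence_if_false):
    """One Bayesian update: return the belief after seeing the evidence."""
    numerator = p_evidence_if_true * prior
    # Denominator is the total probability of seeing this evidence at all.
    return numerator / (numerator + p_evidence_if_false * (1 - prior))


belief = 0.01        # hypothetical prior: 1 percent chance of disease
history = [belief]   # record of the belief after each piece of evidence

# Three positive test results in a row, assumed independent of one another.
for _ in range(3):
    belief = update(belief, p_evidence_if_true=0.95, p_evidence_if_false=0.10)
    history.append(belief)

print([round(b, 3) for b in history])
```

Under these assumptions the belief climbs from 1 percent to roughly 9 percent, then about 48 percent, then about 90 percent. No single positive result is decisive, but the evidence accumulates.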

After talking with Carr about Bayes’ theorem, I am still a tepid appreciator of Bayes’ theorem, but this episode did help me update my priors. A few more positive experiences and I too may become a Bayes enthusiast.