This article was published in Scientific American’s former blog network and reflects the views of the author, not necessarily those of Scientific American

The ideas of AR (augmented reality) and VR (virtual reality) are familiar to almost everyone by now; most people probably think of them in the context of immersive video games. But AR/VR also holds enormous potential for science. There are programs that run on VR headsets that provide a testing ground for surgeons in training, AR microscopes that can detect cancerous cells in real time, and an “AR sandbox” that allows users to create 3-D topographical models to learn about watersheds, levees, earth contours, etc.

At Springer Nature (the parent company of Scientific American), we are looking to our own scientific data and imagining where all that information can take us when given a new life inside AR/VR applications. To that end, we will be hosting our first-ever mixed-reality Hackathon, November 7–9 in San Francisco, hosted at the Microsoft Reactor. Participants will bring their favorite AR/VR headsets, their laptops and lots of creative energy; Springer Nature will provide access to millions of scientific data points through various APIs. Teams will solve real-world problems, visualizing data across the sciences, in categories like Health, Humanitarian Aid, the Environment, an Inclusive World and more.

And while this exciting new world is right at our fingertips today, I’d like to give you a sense of how far we’ve come, and where we have yet to go.

THE PAST

Many years ago, in a past life, I was a neurobiologist focusing on how neuronal networks are shaped in the living brain. At the turn of the century I was fortunate enough to end up in the laboratory of Andrew Matus at the Friedrich Miescher Institute in Basel, where they did all kinds of cool stuff like live cell imaging. We saw that synapses, the elementary units where information is passed from one cell to another, wiggle around. Subsequently we wondered what basic shape these synapses had, so we went on a quest to find out their structure.

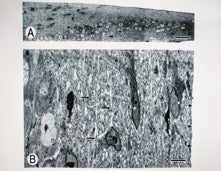

Figure 1. Credit: Martijn Roelandse

And so we zoomed in on a slice of neuronal tissue (Figure 1 A/B) to an area with lots of synapses (roughly one micrometer in diameter) using the electron microscope (Figure 2, serial sections through a synapse, reconstructed in red in Figure 3).

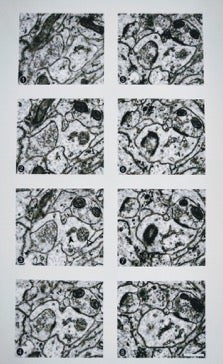

Figure 2. Credit: Martijn Roelandse

Now, of course, to truly understand the structure of the synapse we had to go beyond the two-dimensional plane, which was rather labor-intensive: it meant outlining the synapse area in sequential sections. The traced structures could then be converted to vectors and rendered in an early 3-D viewer. In your Netscape browser, what else?


Figure 3. Credit: Martijn Roelandse

It was a lot of work to fully understand the morphology of these synapses.
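The reconstruction idea described above (trace the synapse outline in each serial section, then stack the outlines along the cutting axis) can be illustrated with a minimal Python sketch. This is not the software we used at the time; the function names, the square outlines and the section thickness are all hypothetical and chosen only to show the principle.

```python
from typing import List, Tuple

Point2D = Tuple[float, float]
Point3D = Tuple[float, float, float]


def polygon_area(outline: List[Point2D]) -> float:
    """Area enclosed by a closed, traced outline (shoelace formula)."""
    total = 0.0
    n = len(outline)
    for i in range(n):
        x1, y1 = outline[i]
        x2, y2 = outline[(i + 1) % n]  # wrap around to close the outline
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


def stack_outlines(outlines: List[List[Point2D]],
                   section_thickness: float) -> List[Point3D]:
    """Give every traced point a z coordinate based on its section index."""
    vertices: List[Point3D] = []
    for index, outline in enumerate(outlines):
        z = index * section_thickness
        vertices.extend((x, y, z) for x, y in outline)
    return vertices


def estimate_volume(outlines: List[List[Point2D]],
                    section_thickness: float) -> float:
    """Approximate volume as the sum of (outline area x section thickness)."""
    return sum(polygon_area(o) for o in outlines) * section_thickness


if __name__ == "__main__":
    def square(side: float) -> List[Point2D]:
        # Hypothetical stand-in for a hand-traced outline, in micrometers.
        return [(0.0, 0.0), (side, 0.0), (side, side), (0.0, side)]

    traced = [square(0.4), square(1.0), square(0.4)]  # three serial sections
    thickness = 0.05  # assumed section thickness in micrometers

    print(len(stack_outlines(traced, thickness)), "vertices in 3-D")
    print(estimate_volume(traced, thickness), "cubic micrometers (approx.)")
```

The stacked vertices are what a 3-D viewer would then connect into a surface; the volume estimate simply treats each section as a thin slab.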

But, later on, software like MetaMorph from Universal Imaging, Imaris from Bitplane or the open source ImageJ made life quite a bit easier, at least for light and fluorescence microscopy. And some smart researchers took a crowdsourcing approach to the tedious work of outlining all synapses and turned it into Eyewire, a game to map the brain.

Despite all advances, you still had to understand a three-dimensional object (the synapse) from a two-dimensional viewer (your screen).

ENTER VIRTUAL REALITY

With the arrival of the fifth wave of computing, amazing techniques like artificial intelligence, blockchain and virtual reality have become more mainstream, and, subsequently, products have been built that embrace these new kids on the block. ConfocalVR is one of them. These and similar VR applications give science a new dimension and allow researchers to view and share data as never before, as was also noted in a recent Nature "Toolbox" article. Of course, these applications can go beyond analyzing morphological structures. Engaging with the molecular makeup of proteins using ChimeraX or interacting with large datasets in a geographical context using ODxVD are just two of the early explorations into this space. At Springer Nature we have also been testing new forms of presenting books in order to optimize the time we need to understand and memorize what we have read.

WHAT’S NEXT?

Our VR tests are just the beginning. Springer Nature continues to keep an eye on new technologies that can help our readers interact with scholarly publishing in impactful, engaging ways. One of our favorite ways to do this is through a recently launched series of Hack Days and Hackathons. At these events, we have seen rapid prototyping of some incredibly imaginative applications that take research data and scholarly publishing to the next level. Previous #SN_HackDays explored challenges in discovery, research data and analytics.

Credit: Martijn Roelandse

During our next Hackathon, we will build on our VR experiments and take a more visual approach to scientific data, inspired by advances in mixed-reality technologies. Hack Day and Hackathon winners usually earn a chance to pitch their prototype to Springer Nature for possible inclusion in our product portfolio, plus a number of other great prizes. And the research community gains better visibility into, and engagement with, important data. We look forward to seeing what the future holds.