This article was published in Scientific American’s former blog network and reflects the views of the author, not necessarily those of Scientific American

For 50 years, semiconductor electronics has produced a reduction in cost per transistor of more than 30% per year while doubling the number of transistors per chip about every two years. This phenomenon has come to be known as Moore’s Law, after Gordon Moore, co-founder of both Fairchild Semiconductor and Intel Corporation, who first articulated it at a meeting of the Electrochemical Society in late 1964. Although Moore probably didn’t realize it at the time, Moore’s Law is a special case of the learning curve, which has been well known for over one hundred years. The good news is that the learning curve should continue to hold at least as far into the future as it has into the past.
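The two rates in the opening sentence compound very differently over five decades. The Python sketch below simply compounds them, assuming the rates hold exactly every year (30% cost reduction per year, one doubling of transistors per chip every two years); the constant and function names are illustrative, not taken from any source.

```python
# Back-of-the-envelope compounding of the two rates quoted above.
# Assumption: exactly 30% cost reduction every year and one doubling
# of transistors per chip every two years, sustained for 50 years.
YEARS = 50
ANNUAL_COST_DECLINE = 0.30   # fraction of cost per transistor removed each year
DOUBLING_PERIOD_YEARS = 2.0  # years per doubling of transistors per chip


def cost_factor(years: float, annual_decline: float) -> float:
    """Fraction of the starting cost per transistor left after `years`."""
    return (1.0 - annual_decline) ** years


def density_factor(years: float, doubling_period: float) -> float:
    """Multiplier on transistors per chip after `years`."""
    return 2.0 ** (years / doubling_period)


if __name__ == "__main__":
    print(f"cost per transistor multiplied by {cost_factor(YEARS, ANNUAL_COST_DECLINE):.2e}")
    print(f"transistors per chip multiplied by {density_factor(YEARS, DOUBLING_PERIOD_YEARS):.2e}")
```

Under these assumptions the cost per transistor ends up at 0.7^50, a little under two hundred-millionths of its starting value (about 1.8 × 10^-8), while the transistor count per chip grows by 2^25, roughly 3.4 × 10^7.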


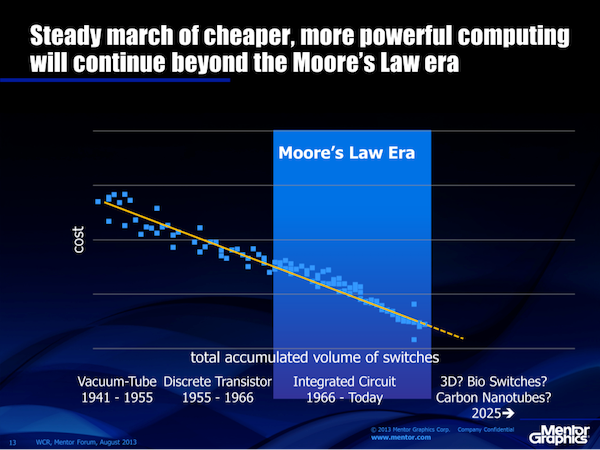

The sustained 30% per year cost reduction in producing transistors is explained by the learning curve. Image courtesy Mentor Graphics.

To understand why, recall that a transistor is simply the latest iteration of the on/off switch at the heart of computing. Fig. 2 below plots cost versus total accumulated volume of switches on log/log axes, and shows how predictably the cost per “switch” has declined as mechanical switches gave way to vacuum tubes and then to transistors, which today take the form of integrated circuits etched onto silicon chips. The key point: every time the total accumulated volume doubles, the cost per switch decreases by a fixed percentage (adjusted for inflation).
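The doubling rule in that last sentence can be written as a power law. If r is the fractional cost reduction per doubling, then once the accumulated volume grows from V0 to V, the cost per switch is C(V) = C0 × (V/V0)^log2(1 − r), a straight line with slope log2(1 − r) on a log/log plot. The Python sketch below assumes exactly that form; the article gives no value for r, so the 25% used here is purely hypothetical.

```python
import math


def learning_curve_cost(volume: float, initial_volume: float,
                        initial_cost: float, reduction_per_doubling: float) -> float:
    """Cost per unit once cumulative volume reaches `volume`.

    Every doubling of cumulative volume multiplies the cost by
    (1 - reduction_per_doubling), i.e. C(V) = C0 * (V / V0) ** log2(1 - r).
    """
    if not 0.0 <= reduction_per_doubling < 1.0:
        raise ValueError("reduction_per_doubling must be in [0, 1)")
    if volume <= 0 or initial_volume <= 0:
        raise ValueError("volumes must be positive")
    exponent = math.log2(1.0 - reduction_per_doubling)  # <= 0, so cost falls as volume grows
    return initial_cost * (volume / initial_volume) ** exponent


def log_log_slope(v1: float, c1: float, v2: float, c2: float) -> float:
    """Slope of the line through two (volume, cost) points on log/log axes."""
    return (math.log(c2) - math.log(c1)) / (math.log(v2) - math.log(v1))


if __name__ == "__main__":
    r = 0.25  # hypothetical reduction per doubling, for illustration only
    for doublings in range(5):
        v = 2 ** doublings
        print(v, learning_curve_cost(v, 1.0, 1.0, r))
```

Whichever two points you pick, `log_log_slope` returns the same value, log2(1 − r), which is why a constant percentage per doubling shows up as a straight line on a log/log plot.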

The learning curve suggests the basics of Moore's Law will continue beyond the era of the integrated circuit. Image courtesy Mentor Graphics.

Think of the learning curve as sitting one rung above Moore’s Law in the taxonomy of theories. The data suggest that the learning curve is a universal phenomenon, as near a law of nature as exists in the world of technology manufacturing, and indeed anywhere humans engage in a repeated task. Stated plainly, the learning curve doesn’t care how we achieve the reduction in cost per switch, only that we achieve it, as we always have. The rate of decrease in the cost per transistor will inevitably slow from its historical 30% per year as the total accumulated volume becomes very large, because each further doubling of an already enormous volume tends to take longer. Even so, nature (or at the very least, history) tells us that the cost reduction must still be achieved, possibly by different means such as carbon nanotubes, bioelectronics, quantum tunneling, spintronics or something else entirely. As we achieve lower cost, new applications and computer architectures will emerge, accelerating the growth in unit volume, further reducing the cost per switch and growing the total market for semiconductors.
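Why the annual rate slows follows from the doubling rule: the cost drop in a given year depends only on how much the accumulated volume grows that year. If each year’s shipments match everything shipped before, accumulated volume doubles annually and cost falls by the full per-doubling percentage; if shipments level off, each year adds a smaller fraction of an ever larger total. The Python sketch below assumes the same power-law learning curve; the shipment figures and the 25% reduction per doubling are hypothetical.

```python
import math
from typing import List, Sequence


def annual_cost_declines(annual_units: Sequence[float],
                         prior_cumulative: float,
                         reduction_per_doubling: float) -> List[float]:
    """Fractional drop in cost per unit for each year of shipments.

    Under the learning curve, cost(this year) / cost(last year) equals
    (V_new / V_old) ** log2(1 - r), where V is accumulated volume.
    """
    if prior_cumulative <= 0:
        raise ValueError("prior_cumulative must be positive")
    exponent = math.log2(1.0 - reduction_per_doubling)
    declines = []
    cumulative = prior_cumulative
    for units in annual_units:
        new_cumulative = cumulative + units
        declines.append(1.0 - (new_cumulative / cumulative) ** exponent)
        cumulative = new_cumulative
    return declines


if __name__ == "__main__":
    r = 0.25  # hypothetical reduction per doubling
    # Hypothetical shipments: at first each year matches everything shipped
    # before (accumulated volume doubles yearly), then shipments stay flat.
    shipments = [1, 2, 4, 8, 8, 8, 8]
    for year, drop in enumerate(annual_cost_declines(shipments, 1.0, r), start=1):
        print(f"year {year}: cost per unit falls {drop:.1%}")
```

With these made-up numbers the yearly decline holds at 25% while accumulated volume doubles every year, then slips to roughly 15%, 11% and 9% once shipments stop growing.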

Critically, the learning curve also doesn’t care whether the “switch” is a logic transistor or a memory bit; both carry the information needed to choose intelligently between alternatives. And therein lies the roadmap for semiconductor technology evolution for the next ten to twenty years.

Since 1995, the percentage of transistors used for memory versus logic functions on the most advanced integrated circuits has been increasing. While memory can be embedded on the logic chip — today usually referred to as the system-on-a-chip, or SoC — memory also resides in dedicated memory chips. Increased complexity of logic chips, typically manufactured in silicon foundries, has required more exotic technologies that are slowing the rate of decrease in the cost per transistor. But the memory industry has found new life in the past decade.

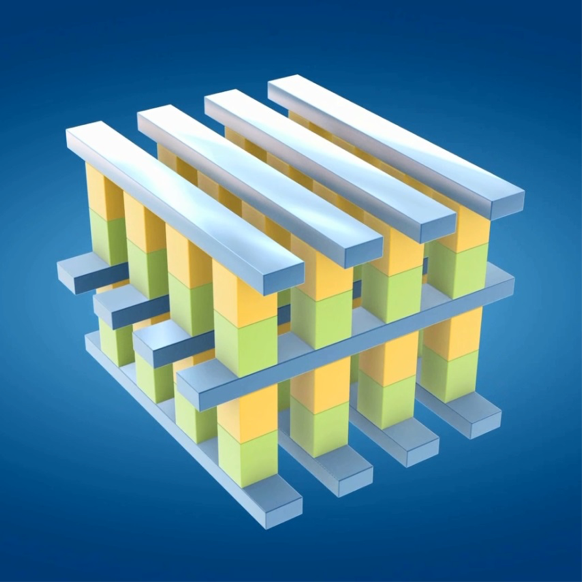

Flash memories, which are now replacing rotating media for computer storage, are coming down in price per bit at a much faster rate than the price per gate of logic. Storing multiple bits per memory cell drove that progress for the previous decade, but now the driving force is ever more efficient manufacturing technologies that vertically stack 32 or more layers of memory cells on the same chip. Meanwhile, new memory architectures, such as 3D XPoint announced by Intel and Micron, promise another order of magnitude or more in cost reduction, along with improved performance. This structure also lends itself to vertical stacking. While none of this comes easily, over the last 20 years the mix of switches manufactured has shifted from 50% logic gates and 50% memory bits to 0.4% logic gates and 99.6% memory bits, and it is still shifting toward memory, strongly influenced by the massive amount of archival, inactive memory required for growing volumes of video and high-resolution photography.
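Two pieces of arithmetic sit behind that paragraph. A 0.4%/99.6% split means 249 memory bits are made for every logic gate, up from one per gate at a 50/50 split. And stacking layers, like storing several bits per cell, multiplies the bits held over a given patch of silicon. The Python sketch below is illustrative only: the function names are hypothetical, and the density function assumes each stacked layer has the same cell footprint as a planar one-bit-per-cell reference, which ignores real differences in cell size between planar and stacked Flash.

```python
def memory_bits_per_logic_gate(logic_share_pct: float) -> float:
    """Memory bits manufactured per logic gate, given logic's share (%) of all switches."""
    if not 0.0 < logic_share_pct <= 100.0:
        raise ValueError("logic_share_pct must be in (0, 100]")
    return (100.0 - logic_share_pct) / logic_share_pct


def relative_bit_density(layers: int, bits_per_cell: int) -> int:
    """Bits over one cell footprint, relative to a single-layer, one-bit-per-cell array.

    Simplifying (hypothetical) assumption: every layer has the same cell footprint
    as the planar reference, so density scales as layers * bits_per_cell.
    """
    if layers < 1 or bits_per_cell < 1:
        raise ValueError("layers and bits_per_cell must be at least 1")
    return layers * bits_per_cell


if __name__ == "__main__":
    print(memory_bits_per_logic_gate(50.0))  # 50/50 split
    print(memory_bits_per_logic_gate(0.4))   # 0.4/99.6 split
    # 32 layers from the text; 2 bits per cell is a hypothetical example
    print(relative_bit_density(layers=32, bits_per_cell=2))
```

Under that simplification, 32 layers with (hypothetically) two bits per cell store 64 times as many bits over the same footprint as the planar, one-bit reference.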

3D XPoint Technology, announced last summer by Intel and Micron, is ten times denser than conventional memory. Credit: Intel.

The plunging cost of memory points to a few reasonable guesses about future computer configurations. The decrease in the cost per transistor will continue on its long-term learning curve, but new electronic applications will find ways to take advantage of lower-cost memory bits rather than logic gates. Cognitive memory architectures that function more like our brains can make it easier to capitalize on the falling cost and power of memory bits. Intelligent sensors for the internet of things, or IoT, can expand their local memory storage at very little cost or incremental power consumption. In China, for example, taxis are already fitted with multiple solid-state imagers so that continuous video can exonerate the drivers in case of a traffic accident. Security monitors can record and locally store all physical activity near the premises being monitored. Computer architectures will shift more of their problem-solving algorithms to memory-intensive ones that take advantage of the virtual memory available to them. And for the average person, the amount of local memory will increase dramatically, shifting the balance between the cost of communicating data with a remote computer in the cloud and the cost of storing information locally on a cell phone, tablet or desktop computer.

Moore’s Law will indeed become irrelevant to the semiconductor industry. But the remarkable progress in reducing the cost per bit, and cost per switch, will continue indefinitely, thanks to the learning curve. And with it, the number of new applications for electronics will continue to be limited only by the creativity of those who search for solutions to new problems.