This article was published in Scientific American’s former blog network and reflects the views of the author, not necessarily those of Scientific American

My last post, “Is Science Hitting a Wall?,” provoked lots of reactions. Some readers sent me other writings about diminishing returns from research. One is “Diagnosing the decline in pharmaceutical R&D efficiency,” published in Nature Reviews Drug Discovery in 2012. The paper is so clever, loaded with ideas and relevant to science as a whole that I’m summarizing its main points here.

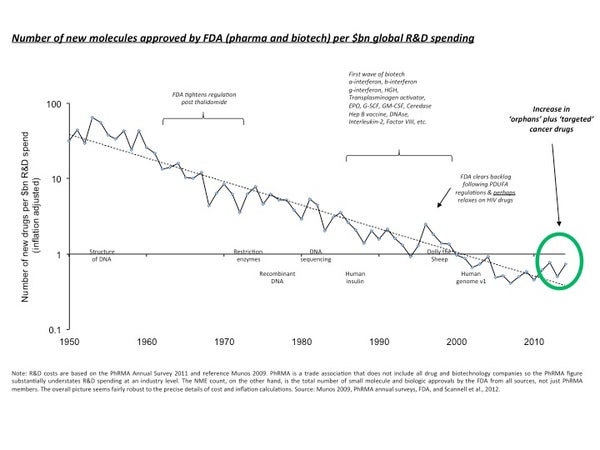

Eroom’s Law. The paper notes that “the number of new drugs approved per billion U.S. dollars spent on R&D has halved roughly every 9 years since 1950.” The authors, Jack Scannell and three other British investment analysts, call this trend “Eroom’s Law,” which is Moore’s Law flipped over. Moore’s Law is Gordon Moore’s famous observation about the growing power of computer chips.
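To get a feel for what “halved roughly every 9 years” implies, here is a minimal sketch of the arithmetic. It assumes a perfectly smooth exponential decline, which the real data only approximate, and it normalizes the 1950 rate to 1.0 rather than using any actual figure. The function name and its parameters are my own illustration, not anything from the paper.

```python
def drugs_per_billion(year, base_year=1950, base_rate=1.0, halving_years=9.0):
    """Relative number of new drugs approved per billion R&D dollars.

    Assumption: an idealized decline that halves exactly every
    `halving_years` years, with the `base_year` rate normalized to
    `base_rate`. The values returned are ratios, not real approval counts.
    """
    elapsed = year - base_year
    return base_rate * 0.5 ** (elapsed / halving_years)


if __name__ == "__main__":
    # Print the relative efficiency at a few points along the curve.
    for year in (1950, 1959, 1968, 1986, 2004):
        print(year, drugs_per_billion(year))
```

Under these assumptions, six halvings between 1950 and 2004 leave the rate at 1/64 of where it started, which is why a trend that sounds gradual adds up to a steep drop over several decades.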

Eroom’s Law might hold for many fields other than drug development. As my previous column notes, Eroom’s Law holds even for computer chips, because upholding Moore’s Law has required more and more resources. Scannell et al identify four factors underpinning Eroom’s Law. Here they are, with brief explanations:

The better than the Beatles problem. “Imagine how hard it would be,” Scannell’s group writes, “to achieve commercial success with new pop songs if any song had to be better than the Beatles, if the entire Beatles catalogue was available for free, and if people did not get bored with old Beatles records.”

Researchers seeking new drugs face a similar situation. “Yesterday’s blockbuster is today’s generic. An ever-improving back catalogue of approved medicines increases the complexity of the development process for new drugs, and raises the evidential hurdles for approval, adoption and reimbursement.” The authors call this problem “progressive and intractable.”

The “better than the Beatles problem” has an equivalent in pure science. Call it the “better than Einstein problem.” Ambitious scientists don’t want merely to tweak or extend science’s greatest hits. They want to come up with revolutionary insights of their own, which might even show that older paradigms were incomplete or wrong. This feat is extremely difficult, because science’s greatest hits are not just aesthetically pleasing, like “Yesterday” or “A Day in the Life.” General relativity, quantum mechanics, the big bang theory, evolutionary theory and the genetic code are true, in the sense of being confirmed by mountains of evidence. That’s one reason why there will probably never be another Einstein.

The cautious regulator problem. Problems like the thalidomide scandal of the late 1950s and early 1960s led to stricter regulation of drug development. “Progressive lowering of the risk tolerance of drug regulatory agencies obviously raises the bar for new drugs, and could substantially increase the associated costs of R&D,” Scannell et al comment. “Each real or perceived sin by the industry, or genuine drug misfortune, leads to a tightening of the regulatory ratchet.”

Scannell et al state that “it is hard to see the regulatory environment relaxing to any extent.” They could not foresee Trump, who has called for rolling back FDA restrictions on drug firms. But so far Trump’s FDA commissioner, Scott Gottlieb, has not pursued deregulation as aggressively as some critics feared.

Ethical constraints impede research in other fields, notably neuroscience. Modern neuroscientists could undoubtedly learn a lot from brain-implant experiments like those carried out in the 1950s and 1960s. (See for example “Tribute to Jose Delgado, Legendary and Slightly Scary Pioneer of Mind Control,” and “Bizarre Brain-Implant Experiment Sought to ‘Cure’ Homosexuality.”) Brain-implant research continues, and occasionally goes awry. But experimentation on humans and other animals is much more tightly regulated than it used to be, fortunately.

The throw money at it tendency. Many companies have responded to competition by “adding human resources and other resources to R&D,” the authors note. They add that there may be “a bias in large companies to equate professional success with the size of one’s budget.”

Investors and managers are now questioning the throw money at it tendency and seeking to slash R&D costs, according to Scannell et al. They add: “The risk, however, is that the lack of understanding of factors affecting return on R&D investment that contributed to relatively indiscriminate spending during the good times could mean that cost-cutting is similarly indiscriminate. Costs may go down, without resulting in a substantial increase in efficiency.”

Stanislaw Lem’s science-fiction classic His Master’s Voice, originally published in Polish in 1968, alludes to the throw money at it tendency. The novel’s narrator is a mathematician working on a government-funded project to decode an extraterrestrial message. He notes that officials overseeing the project assume that “if one man dug a hole with a volume of one cubic meter in ten hours, then a hundred thousand diggers of holes could do the job in a fraction of a second… The thought that our guardians were people who held that a problem that five experts were unable to solve could surely be taken care of by five thousand was hair-raising.”

The basic-research-brute force bias. This is the subtlest factor identified by Scannell et al. They define it as “the tendency to overestimate the ability of advances in basic research (particularly in molecular biology) and brute force screening methods (embodied in the first few steps of the standard discovery and preclinical research process) to increase the probability that a molecule will be safe and effective in clinical trials.”

Drug research has been transformed over the past few decades by advances such as the discovery of the double helix and of neurotransmitters, as well as the invention of powerful tools for decoding genomes and screening compounds. Pharmaceutical research based on these advances has been touted as more rational and efficient than the intuitive, hit-or-miss guesswork of the past.

But the clinical payoff from “molecular reductionism” has been overrated. Look at the failure, so far, of the Human Genome Project to translate into improved therapies for inherited illnesses, or of knowledge about neurotransmitters to produce better psychiatric medications.

Chemist Ashutosh Jogalekar, who blogs as Curious Wave Function, commented on the basic-research-brute force bias in a typically incisive post on the Eroom’s Law paper in 2012. He notes that as we “constrain ourselves to accurate, narrowly defined features of biological systems, it deflects our attention from the less accurate but broader and more relevant features. The lesson here is simple; we are turning into the guy who looks for his keys under the street light only because it's easier to see there.”

Another name for the basic-research-brute force bias could be the devil is in the details problem. Nuclear physics has fallen prey to this problem. The discovery of nuclear fusion in the 1930s and invention of thermonuclear weapons in the 1950s led physicists to expect that fusion could quickly be harnessed for generating energy. More than 70 years later, those expectations remain unfulfilled.

Appoint Dead Drug Officers. Scannell et al propose that to counter Eroom’s Law, drug firms should appoint a “Dead Drug Officer” to perform a post mortem on drugs that fail the R&D process. The officer would submit reports to the firm as well as to funding agencies such as the NSF or NIH and a peer-reviewed journal. These dead-drug reports would help identify ways to make research more productive.

Scannell et al are essentially proposing that science be more accountable. This is the theme of “Saving Science,” a controversial 2016 essay by science-policy scholar Daniel Sarewitz. He argues that science “is trapped in a self-destructive vortex; to escape, it will have to abdicate its protected political status and embrace both its limits and its accountability to the rest of society.” See responses to the essay here.

I can imagine other fields designating a Dead Ideas Officer to improve efficiency, except that in some fields ideas never die. Look, for example, at the persistence of Freudian psychoanalysis in psychology and of string theory in physics. The Dead Ideas Officer could perhaps issue recommendations as to which ideas should be dead and hence cut off from further investment. That would be a thankless job, but someone has to do it, for science’s sake.

Further Reading:

Why There Will Never Be Another Einstein

Was I Wrong about “The End of Science”?

A Dig Through Old Files Reminds Me Why I’m So Critical of Science

Study Reveals Amazing Surge in Scientific Hype

Sorry, But So Far War on Cancer Has Been a Bust

Has the Era of Gene Therapy Finally Arrived?

What's So Great about Innovation?

See also this 2015 paper by Scannell, a 2016 paper by Scannell and a co-author and a non-technical commentary on that paper.